Hey there, SEO enthusiasts and digital marketers! Are you ready to take a deep dive into the world of Google's ranking algorithms?

Thanks to a recent leak of internal API documentation, we now have unprecedented insights into the factors that influence search rankings and the potential risks that could lead to demotions.

This is a game-changer for anyone looking to stay ahead in the ever-evolving world of SEO!

Most Important Ranking Factors

Based on the definitions in the leaked document, the most important Google ranking factors appear to be:

1. Page quality signals

Various signals assess the overall quality and authoritativeness of a page, such as NSRData (NSR likely stands for Neural Salience of Results).

2. Content relevance

Relevance between the page content and the search query appears to be evaluated by scoring mentions of entities, related entities, and categories.

3. Links and Anchor Text

There are extensive definitions for anchor text, simplified anchors, and link information between pages, which suggests that link analysis and anchor text relevance are important.

4. User interaction data

Click data, occurrence in query refinements, and similar interaction data are tracked and likely used as ranking signals.

5. Geographic relevance

There are signals for assigning geographic relevance to pages based on extracted entities, addresses, phone numbers, etc.

6. Freshness

Timestamps for content updates, changes, and indexing are tracked, likely to measure content freshness.

7. Spam and Low Quality Detection

Models and classifiers are referenced for detecting porn, spam, and low-quality pages, which may then be demoted.

8. Rich results features

The documentation describes support for extracting and annotating special content such as recipes, FAQs, and how-tos, potentially for display as rich results.

9. Lexical Matching and Synonyms

Keyword matching between the query and the page content still appears to matter, with synonyms taken into account.
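
To make item 9 concrete, here is a toy sketch of lexical matching with synonym expansion. It is not Google's implementation, which the leaked documentation does not describe. The `SYNONYMS` table, the `lexical_match_score` function, and the scoring rule (the fraction of query terms found in the page, directly or through a synonym) are all assumptions made for illustration.

```python
import re

# Hypothetical synonym table; a real system would use something far richer.
SYNONYMS = {
    "cheap": {"affordable", "inexpensive"},
    "laptop": {"notebook"},
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def lexical_match_score(query: str, page_text: str) -> float:
    """Return the fraction of query terms that appear in the page,
    counting a synonym of a term as a match (assumed scoring rule)."""
    query_terms = tokenize(query)
    if not query_terms:
        return 0.0
    page_terms = set(tokenize(page_text))
    matched = 0
    for term in query_terms:
        # A term matches if it, or any of its listed synonyms, is on the page.
        candidates = {term} | SYNONYMS.get(term, set())
        if candidates & page_terms:
            matched += 1
    return matched / len(query_terms)


if __name__ == "__main__":
    page = "Our guide to affordable notebook computers for students."
    print(lexical_match_score("cheap laptop", page))
    print(lexical_match_score("cheap gaming laptop", page))
```

In this sketch the page never contains the words "cheap" or "laptop", yet both query terms still match through their synonyms, which is the behavior item 9 hints at.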

Key Takeaways from the Leak

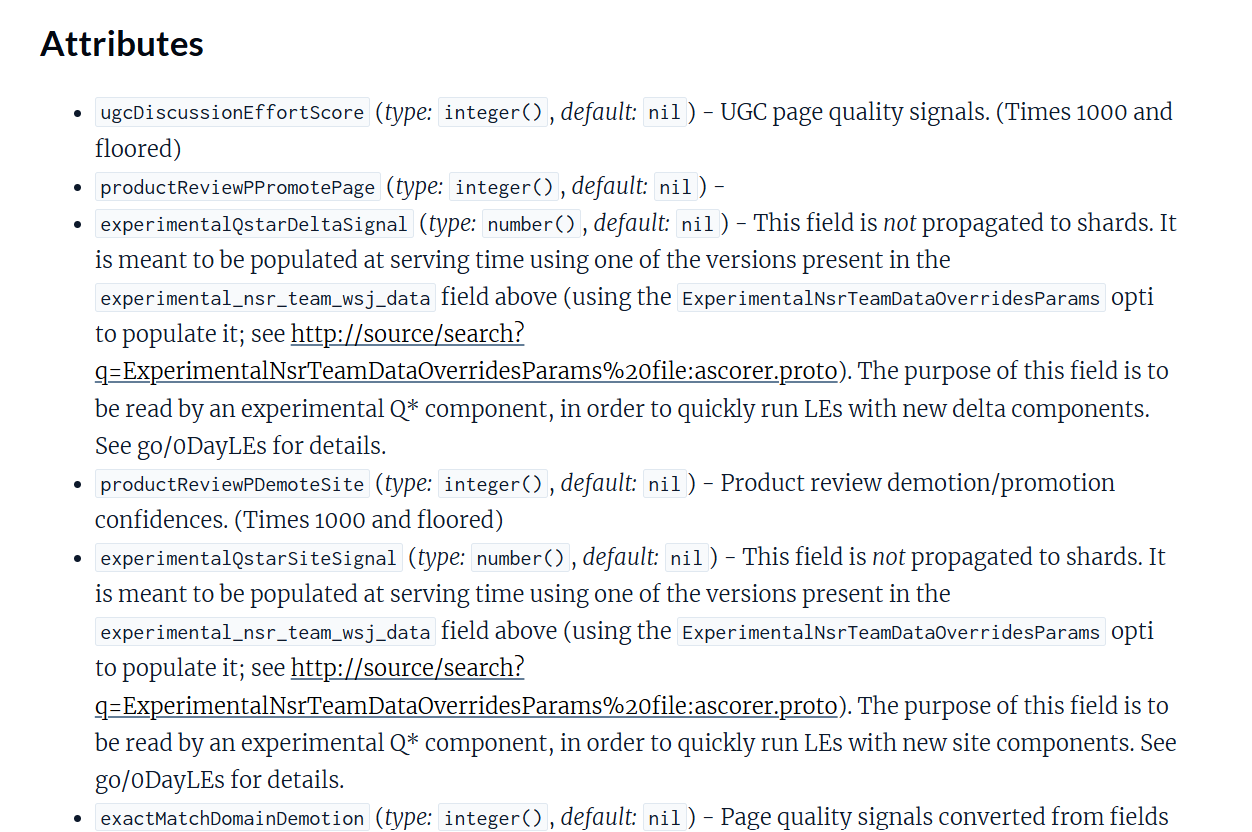

A screenshot of the leaked document

1. Vast Number of Ranking Features

The document outlines that there are over 14,000 ranking features spread across 2,596 modules. These features and modules relate to various aspects of Google's services, such as YouTube, Google Assistant, and web search.

2. Content and Link Signals

Extensive data about content, links, and user interactions are stored and analyzed to evaluate and rank web pages. This highlights the importance of high-quality content and robust link profiles.

3. Internal Ranking Systems

Several internal systems such as NavBoost, FreshnessTwiddler, and Mustang handle different aspects of ranking and re-ranking based on varied criteria. These systems help refine search results for better user experience.

4. Use of Site Authority

Despite Google's public denials, the documentation confirms the use of a "siteAuthority" metric. This domain-level authority impacts how content is ranked across the web.

5. Click Data Utilization

Systems like NavBoost leverage click data to adjust rankings. Metrics such as long clicks and the date of the last good click play a crucial role in determining a page's relevance.

6. Sandboxing Mechanism

The document points to a sandbox mechanism: an attribute called "hostAge" suggests that new or less trusted sites are segregated to limit spam and protect the integrity of search results.

7. Chrome Data in Rankings

Contrary to previous claims, Chrome data is indeed used in ranking calculations, especially in evaluating site-level metrics like views from Chrome.

8. Author Information

Google tracks author information and appears to use it to assess content quality. This aligns with the emphasis on E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness), formerly known as E-A-T.

9. Demotions for Various Factors

Several factors can lead to demotions, such as anchor mismatches, poor navigation practices, and low-quality product reviews. Understanding these demotions is crucial for maintaining or improving search rankings.
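
The click metrics named in item 5 above can be illustrated with a small sketch. The leaked documentation names metrics such as long clicks and the date of the last good click, but it does not say how they are computed, so everything below is an assumption: the `Click` record, the 30-second dwell threshold for a "long" click, and the choice to treat a long click as a "good" click are hypothetical and for illustration only.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Assumed threshold: dwell time in seconds at or above which a click is "long".
LONG_CLICK_SECONDS = 30.0


@dataclass
class Click:
    """Hypothetical click record for one visit from a results page."""
    url: str
    day: date
    dwell_seconds: float  # time spent before returning to the results page


@dataclass
class ClickSummary:
    total_clicks: int
    long_clicks: int
    last_good_click: Optional[date]  # None if the URL has no long clicks


def summarize_clicks(url: str, clicks: list[Click]) -> ClickSummary:
    """Aggregate the hypothetical click records for a single URL."""
    relevant = [c for c in clicks if c.url == url]
    long_ones = [c for c in relevant if c.dwell_seconds >= LONG_CLICK_SECONDS]
    # In this sketch, the most recent long click is the "last good click".
    last_good = max((c.day for c in long_ones), default=None)
    return ClickSummary(len(relevant), len(long_ones), last_good)


if __name__ == "__main__":
    log = [
        Click("https://example.com/a", date(2024, 5, 1), 4.0),
        Click("https://example.com/a", date(2024, 5, 3), 95.0),
        Click("https://example.com/a", date(2024, 5, 7), 41.5),
        Click("https://example.com/b", date(2024, 5, 8), 120.0),
    ]
    print(summarize_clicks("https://example.com/a", log))
```

The practical point for SEOs is the same whatever the real thresholds are: a page that earns clicks but loses visitors within seconds would look very different in such a summary from one that holds their attention.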

Site & Page Level Quality Signals

Based on the analysis of the leaked Google Content Warehouse API documentation, here are the site-level and page-level quality signals that were mentioned.

Site-Level Quality Signals

- siteAuthority: A measure of the overall authority of a domain, converted from quality_nsr.SiteAuthority and applied in Qstar.

- productReviewPDemoteSite and productReviewPPromoteSite: Product review demotion/promotion confidences at the site level.

- experimentalQstarSiteSignal: An experimental site-level signal meant for running Live Experiments (LEs) with new site components.

- pandaDemotion: Encoding of Panda fields from the SiteQualityFeatures proto, representing site-level quality based on the Panda algorithm.

- vlqNsr: NSR (likely Neural Salience of Results) score for low-quality videos at the site level.

Page-Level Quality Signals

- ugcDiscussionEffortScore: User-generated content (UGC) page quality signal.

- productReviewPPromotePage and productReviewPDemotePage: Product review promotion/demotion confidences at the page level.

- exactMatchDomainDemotion: Demotion signal for exact match domains.

- navDemotion: Navigation demotion signal.

- pqData and pqDataProto: Encoded and stripped page-level quality signals.

- babyPandaV2Demotion and babyPandaDemotion: New and old BabyPanda demotion signals applied on top of Panda. BabyPanda may correspond to the Helpful Content Update (HCU), although the documentation does not say so.

- authorityPromotion: Authority promotion signal converted from QualityBoost.authority.boost.

- productReviewPUhqPage: The likelihood of a page being a high-quality review page.

- serpDemotion: Demotion signal based on appearance in low-quality search results pages (SERPs).

- anchorMismatchDemotion: Demotion signal for anchor text mismatches.

- experimentalQstarSignal and experimentalQstarDeltaSignal: Experimental page-level signals for running LEs with new components or delta components.

- scamness: Scam model score used as a web page quality signal in Qstar.

- unauthoritativeScore: Unauthoritative score used as a web page quality signal.

- productReviewPReviewPage: The likelihood of a page being a review page, used for promoting/demoting high-quality/low-quality review pages.

These site-level and page-level quality signals provide insights into how Google assesses the overall quality and relevance of websites and individual web pages. They are used in various algorithms and components, such as Qstar, Panda, and BabyPanda, to promote high-quality content and demote low-quality or spammy pages.

Some of these signals are experimental and used for testing new ranking factors, while others are well-established and play a significant role in determining search rankings.
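
One way to keep track of the signals listed above is to group them in a simple record. The field names below are taken from the leaked documentation as quoted in this article. The types, the `None` defaults, and the `active_demotions` helper are assumptions for illustration and say nothing about how Google stores or combines these values.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class SiteQualitySignals:
    """Subset of the site-level signals; float types are an assumption."""
    siteAuthority: Optional[float] = None
    productReviewPDemoteSite: Optional[float] = None
    productReviewPPromoteSite: Optional[float] = None
    pandaDemotion: Optional[float] = None
    vlqNsr: Optional[float] = None


@dataclass
class PageQualitySignals:
    """Subset of the page-level signals; float types are an assumption."""
    ugcDiscussionEffortScore: Optional[float] = None
    productReviewPPromotePage: Optional[float] = None
    productReviewPDemotePage: Optional[float] = None
    exactMatchDomainDemotion: Optional[float] = None
    navDemotion: Optional[float] = None
    babyPandaV2Demotion: Optional[float] = None
    babyPandaDemotion: Optional[float] = None
    authorityPromotion: Optional[float] = None
    productReviewPUhqPage: Optional[float] = None
    serpDemotion: Optional[float] = None
    anchorMismatchDemotion: Optional[float] = None
    scamness: Optional[float] = None
    unauthoritativeScore: Optional[float] = None
    productReviewPReviewPage: Optional[float] = None


def active_demotions(signals) -> list[str]:
    """Hypothetical helper: names of populated fields that mention a demotion."""
    return [
        f.name
        for f in fields(signals)
        if "demot" in f.name.lower() and getattr(signals, f.name) is not None
    ]


if __name__ == "__main__":
    page = PageQualitySignals(
        navDemotion=0.4, scamness=0.1, anchorMismatchDemotion=0.2
    )
    print(active_demotions(page))
```

A record like this is mainly useful as an audit checklist: for each field, ask whether anything about your site or page could plausibly trigger it.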

Google's Biggest Secrets Revealed

Based on the analysis of the leaked Google Content Warehouse API documentation, several significant revelations could be considered the biggest secrets:

1. Confirmation of Site Authority

Despite Google's public denials, the documentation confirms the use of a site-level authority metric called "siteAuthority." This indicates that Google does indeed assess the overall authority of a domain, which influences the rankings of individual pages within that domain.

2. Extensive Use of Click Data

The documentation reveals that Google relies heavily on click data (through the CRAPS system) to measure the relevance and popularity of URLs, hosts, and patterns. This contradicts Google's public statements downplaying the importance of click data in its ranking algorithms.

3. Existence of a Sandbox Mechanism

The presence of an attribute called "hostAge" suggests that Google employs a sandbox mechanism, where new or less trusted sites are isolated until they prove their value. This confirms a long-standing suspicion in the SEO community about the existence of a sandbox affecting new websites.

4. Utilization of Chrome Data

Contrary to Google's previous claims, the documentation reveals that data from the Chrome browser, such as page views, is used in ranking calculations. This indicates that Google leverages user behavior data from its own browser to influence search rankings.

5. Demotion Signals and Penalties

The documentation sheds light on various demotion signals and penalties, such as exactMatchDomainDemotion, navDemotion, and anchorMismatchDemotion. These signals are used to demote pages and sites based on specific criteria, providing insights into Google's efforts to combat spam and low-quality content.

6. Experimental Signals and Live Experiments

The presence of experimental signals, such as experimentalQstarSignal and experimentalQstarDeltaSignal, highlights Google's continuous efforts to test and evaluate new ranking factors through live experiments. This reveals that Google is constantly refining its algorithms and exploring new ways to assess content quality and relevance.

7. Emphasis on Product Reviews

The documentation includes several signals related to product reviews, such as productReviewPPromotePage and productReviewPDemoteSite. This suggests that Google places significant importance on the quality and authenticity of product reviews, actively promoting or demoting pages and sites based on these factors.

8. Complexity and Scale of Ranking Factors

The sheer number of ranking factors (over 14,000 across 2,596 modules) mentioned in the documentation underscores the complexity and scale of Google's ranking algorithms. This revelation provides a glimpse into the intricate system Google has built to evaluate and rank web pages.

These secrets offer valuable insights into Google's inner workings and shed light on factors that SEOs and website owners have long speculated about.

The confirmation of site authority, the extensive use of click data, the existence of a sandbox, and the utilization of Chrome data are particularly significant, as they challenge Google's public statements and provide a more accurate picture of how the search engine operates behind the scenes.

However, it's essential to note that while these revelations are significant, they do not provide a complete understanding of Google's ranking algorithms, which likely involve many more factors and complex interactions not covered in the leaked documentation.